False Positive Rate Confusion Matrix

A confusion matrix is a table that is often used to describe the performance of a classification model (or classifier) on a set of test data for which the true values are known. In machine learning and statistical classification, it is a table in which each row represents the instances of an actual class and each column represents the instances of a predicted class (or vice versa, depending on the tool). You can create a confusion matrix in Python or R. As an example of one of its cells, suppose you predicted that an animal is a cat but it actually is not (it's a dog): that is a false positive. In this situation, false positives should be as low as possible.
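
A minimal Python sketch of how the four cells can be counted by hand, assuming binary labels where "cat" is the positive class and "dog" is the negative class. The helper name `confusion_counts` and the sample labels are made up for illustration; libraries such as scikit-learn offer a ready-made `sklearn.metrics.confusion_matrix`, but counting by hand shows what each cell means.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive):
    """Count TP, FP, TN, FN for a binary problem (hypothetical helper)."""
    counts = Counter()
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            # Predicted positive: a true positive only if the actual label is positive too.
            counts["TP" if actual == positive else "FP"] += 1
        else:
            # Predicted negative: a true negative only if the actual label is negative too.
            counts["FN" if actual == positive else "TN"] += 1
    # Counter returns 0 for missing keys, so every cell is always present.
    return {key: counts[key] for key in ("TP", "FP", "TN", "FN")}


# Made-up labels: "cat" is the positive class, "dog" the negative class.
actual = ["cat", "dog", "cat", "dog", "dog", "cat"]
predicted = ["cat", "cat", "dog", "dog", "dog", "cat"]

c = confusion_counts(actual, predicted, positive="cat")
# c == {"TP": 2, "FP": 1, "TN": 2, "FN": 1}

# Rows are actual classes, columns are predicted classes.
print("            pred cat  pred dog")
print(f"actual cat  {c['TP']:>8}  {c['FN']:>8}")
print(f"actual dog  {c['FP']:>8}  {c['TN']:>8}")
```

The second pair in the sample data (actual "dog", predicted "cat") is exactly the false positive described above.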

The confusion matrix itself is relatively simple to understand, but the related terminology can be confusing. The basic measures derived from the confusion matrix are the counts in its cells. For example, when the target is positive and the model predicted it as negative, the result is a false negative. We usually denote these counts as TP, FP, TN and FN instead of writing "the number of true positives" and so on.

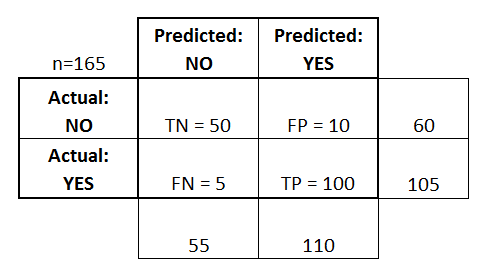

As you might have noticed, this is the same confusion matrix from the beginning of the article, just more nicely presented. In predictive analytics, a table of confusion (sometimes also called a confusion matrix) is a table with two rows and two columns that reports the number of false positives, false negatives, true positives and true negatives. This allows more detailed analysis than the mere proportion of correct classifications (accuracy). Accuracy will yield misleading results if the data set is unbalanced, because a model can score well simply by always predicting the majority class. A better picture comes from plotting the true positive rate (TPR, or recall) against the false positive rate (FPR), which we haven't defined yet. The rest of this post discusses the true positive rate, true negative rate, false positive rate and false negative rate in detail. The true positive rate, for instance, describes what proportion of patients with diabetes are correctly identified as having diabetes. A false positive is a type I error. In the example matrix, there are 1943 true negatives (TN) and 344 false negatives (FN).
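
To make the point about unbalanced data concrete, here is a small Python sketch with made-up counts (they are not the example matrix from this post): on a test set of 990 negatives and 10 positives, a "model" that always predicts negative reaches 99% accuracy while finding none of the positives. The function names are illustrative only.

```python
def accuracy(tp, fp, tn, fn):
    """Fraction of all predictions that are correct."""
    return (tp + tn) / (tp + fp + tn + fn)


def true_positive_rate(tp, fn):
    """Fraction of actual positives the model catches (recall)."""
    return tp / (tp + fn)


# Hypothetical unbalanced test set: 990 negatives, 10 positives.
# Always predicting "negative" gets every negative right and every positive wrong.
tp, fp, tn, fn = 0, 0, 990, 10

print(accuracy(tp, fp, tn, fn))    # 0.99
print(true_positive_rate(tp, fn))  # 0.0
```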

We can try to understand this graph by generating a confusion matrix for each point on it (each point corresponds to one decision threshold) and then talking about the performance of our classifier at that threshold. In the example matrix there are 181 true positives (TP). The true positive rate is $\mathrm{TPR} = \frac{TP}{TP + FN}$; with the example's 344 false negatives this gives $181 / 525 \approx 0.345$.
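
A minimal Python sketch of the "one confusion matrix per threshold" idea: for each threshold it counts TP, FP, TN and FN, then turns that matrix into one (FPR, TPR) point. The labels and scores are made up, the helper name `roc_points` is hypothetical, and predicting positive when `score >= threshold` is an assumed convention (some tools use `>`). It also assumes the data contains at least one positive and one negative.

```python
def roc_points(y_true, scores, thresholds):
    """Return one (FPR, TPR) point per threshold.

    y_true: 1 for an actual positive, 0 for an actual negative.
    scores: classifier score per example; predicted positive if score >= threshold.
    """
    points = []
    for t in thresholds:
        tp = fp = tn = fn = 0
        for actual, score in zip(y_true, scores):
            predicted = 1 if score >= t else 0
            if predicted and actual:
                tp += 1
            elif predicted and not actual:
                fp += 1
            elif actual:
                fn += 1
            else:
                tn += 1
        # One confusion matrix per threshold gives one point on the curve.
        points.append((fp / (fp + tn), tp / (tp + fn)))
    return points


# Made-up labels and scores for illustration only.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
thresholds = [0.0, 0.5, 0.85, 1.0]

for t, (fpr, tpr) in zip(thresholds, roc_points(y_true, scores, thresholds)):
    print(f"threshold={t:.2f}  FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Lowering the threshold moves the point toward (1, 1), where everything is predicted positive; raising it moves toward (0, 0).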

A confusion matrix is used to evaluate classification models, and it gives us insights into how the model works. In particular, you can use a confusion matrix to calculate the true positive rate (TPR) and the false positive rate (FPR):

$$\mathrm{FPR} = \frac{FP}{FP + TN}$$

In the example matrix there are 32 false positives (FP) and 1943 true negatives (TN), so $\mathrm{FPR} = 32 / 1975 \approx 0.016$. An FPR of 1 is equivalent to always predicting positive when the condition is negative. Many people are confused by these names. In medical settings, sensitivity (the true positive rate) and specificity (the true negative rate) are the two most reported ratios from the confusion matrix. For a smaller example, in a confusion matrix with 19 total predictions, 14 are correct and 5 are wrong.
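
The FPR formula translates directly into code. This Python sketch uses the example counts quoted above (FP = 32, TN = 1943) and then checks the claim that a classifier which always predicts positive has an FPR of 1; the function name is illustrative only.

```python
def false_positive_rate(fp, tn):
    """FPR = FP / (FP + TN): share of actual negatives predicted positive."""
    return fp / (fp + tn)


# Counts from the example matrix in this post.
print(round(false_positive_rate(fp=32, tn=1943), 4))  # 0.0162

# Always predicting positive turns every actual negative into a false positive,
# so TN = 0 and FPR = 1 (1975 = 32 + 1943 actual negatives).
print(false_positive_rate(fp=1975, tn=0))  # 1.0
```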

People sometimes mistakenly think that the "positive" in a term such as "false positive" is about the actual label of the data, so be careful: it refers to what the model predicted. A false positive is a case the model predicted as positive whose actual label is negative.
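
One way to remember the naming rule, sketched in Python: the second letter (P or N) comes from the prediction, and the first letter (T or F) says whether that prediction matched the actual label. The function `outcome` is a hypothetical helper written for this illustration.

```python
def outcome(actual_positive: bool, predicted_positive: bool) -> str:
    """Name the confusion-matrix cell for one prediction.

    Second letter: what the model predicted (P or N).
    First letter: whether the prediction was right (T or F).
    """
    first = "T" if actual_positive == predicted_positive else "F"
    second = "P" if predicted_positive else "N"
    return first + second


# Predicted "cat" (positive), but it is actually a dog (negative):
print(outcome(actual_positive=False, predicted_positive=True))  # FP
```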

A commonly used method to measure the performance of a classification algorithm is a confusion matrix. The idea of making concepts as visceral as possible has been a new learning technique that I want to apply whenever possible. The four outcomes can be a true positive (the target is positive and the model predicted it as positive), a false positive, a true negative or a false negative. To boil it down, in the given matrix we have TP = 181, FP = 32, TN = 1943 and FN = 344. The formula to calculate the true negative rate is $\mathrm{TNR} = \frac{TN}{TN + FP}$, which for these counts is $1943 / 1975 \approx 0.984$; note that $\mathrm{TNR} = 1 - \mathrm{FPR}$.
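
Putting the four counts together, this Python sketch computes all four rates for the example matrix (TP = 181, FP = 32, TN = 1943, FN = 344). The helper name `rates` is hypothetical; the comments note the usual alternative names (sensitivity for TPR, specificity for TNR) and the complement pairs TPR + FNR = 1 and TNR + FPR = 1.

```python
def rates(tp, fp, tn, fn):
    """Return the four rates derived from a 2x2 confusion matrix."""
    return {
        "TPR": tp / (tp + fn),  # sensitivity / recall
        "FNR": fn / (tp + fn),  # equals 1 - TPR
        "TNR": tn / (tn + fp),  # specificity
        "FPR": fp / (tn + fp),  # equals 1 - TNR
    }


# Counts from the example matrix in this post.
r = rates(tp=181, fp=32, tn=1943, fn=344)
for name, value in r.items():
    print(f"{name} = {value:.3f}")
# TPR = 0.345, FNR = 0.655, TNR = 0.984, FPR = 0.016
```

The rates are normalised within each actual class (positives for TPR and FNR, negatives for TNR and FPR), which is why they stay meaningful on unbalanced data where accuracy does not.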

We applied the confusion matrix because it shows the false positive rate, which is the quantity we aim to reduce.

